The subject of artificial intelligence is a strange one when you stop to think about it. You could say it’s been around in various forms for a while, but what we consider modern AI, or large language models (LLMs), something we’d recognize as being AI in the sense we’ve envisioned for decades, has only arrived on the scene relatively recently.

But the concept of AI has been around for ages. Machines acting human-like is a very old concept we’ve seen in Hollywood repeatedly: Rosie from “The Jetsons,” R2-D2 and C-3PO from “Star Wars,” KITT from “Knight Rider,” Johnny 5 from “Short Circuit,” and Bender from “Futurama.” These are just a few of the myriad AI characters we’ve dreamed up over the course of decades.

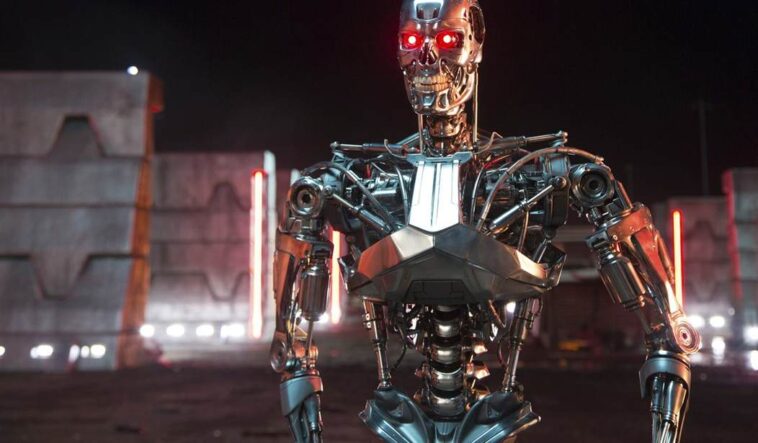

As fun as it was to talk about AI companions, there was also the horror aspect of AI. Machines are clearly stronger, and computers are obviously faster… so what happens when AI gets advanced enough that it just decides it doesn’t need us? This question spawned its own versions of AI where the machines turned evil and ruthlessly dominated humanity: “The Matrix,” “Terminator,” and, probably the most frightening of them all, AM from “I Have No Mouth, and I Must Scream.”

With AI now becoming a huge part of everyday life in the real world, the thought of the evil AI is on a lot of people’s minds. Various articles bring this up when discussing this tech, and you can even see the concern in the comments section of this website whenever AI is written about. Inevitably, someone mentions “Skynet.”

But will AI destroy humanity?

Anything is possible… but it’s highly unlikely. All the Hollywood depictions of out-of-control, independently deadly AI are just that: Hollywood depictions.

Not only is AI highly likely not to kill us off, it may be integral to our survival as a species, especially as we move further into being a space-faring one.

First things first. Why won’t AI kill us? Because we won’t program it to. While I’m sure someone out there with the know-how could program a malicious AI, and I have no doubt that will actually happen, the likelihood of it getting too out of control is low simply because, by that point, counter-systems will have been developed using beneficial AI. Much like a computer virus is stopped by antivirus software, there will be AI out there whose entire job is to protect systems and people.

But the most common fear is one of an AI system becoming self-aware and deciding it doesn’t need humans anymore.

Let’s say an AI system does become self-aware. There is no real logical reason for it to want to eliminate all of humanity. An AI does not want. It does not desire resources. It does not consume or reproduce. It has no drive to. It’s a machine.

We attribute these things to an AI because that’s in OUR nature, and since we are the most dominant and intelligent species on the planet, we naturally attribute that nature to whatever might become more advanced than us, but that’s just not the case. Human nature and AI nature are not the same. AI has no biological drives, and thus its priorities wouldn’t be similar to ours. Humans covet and consume; they fight for resources, mates, and dominance. An AI has no need for any of that.

Moreover, there is an entire field of research looking into AI ethics as I write this. There is Asimov’s “Three Laws,” of course:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Even outside these embedded ethical constraints, value alignment and human-in-the-loop safeguards are being developed constantly. AI might not be a clear and present danger to us as a rogue element, but it is a powerful tool that can and will be used as a weapon, and as such, parameters must be set by its human creators.

This brings me to the threat AI actually can pose, and that’s acting at the behest of very biased masters. I’ve already written about how Democrats have eyed AI as a tool of information control. Google has also demonstrated its willingness to utilize AI to push radical agendas.

(READ: The Democrats Are Moving to Regulate AI and Naturally, It Threatens Free Speech)

(READ: Yes, Google Gemini’s Creator Is, in Fact, Racist Against White People)

Understand, however, that this is still a human problem, not an AI one, and proof that there needs to be far more oversight and an abundance of caution when programming AI that would be consequential to humans in everyday affairs.

Honestly, the biggest threat to humans posed by AI is, at least currently, the romantic AI companion, which I won’t go into too much in this article as I’ve written about it quite a bit already. Suffice it to say, it could contribute to a birth rate decrease in the first world.

(READ: The Intriguing but Dangerous Future of AI Companionship)

There is also the threat of AI taking human jobs, which is already starting to happen in various ways. My friend and colleague Ward Clark wrote a piece describing how AI could threaten 10 percent of our workforce.

All of these are issues AI could cause, but none of these threaten humanity as a species in an apocalyptic way. These are more like problems we need to solve in order to live more comfortably with AI.

And live with AI we will. There’s no putting that cat back in the bag now. Future generations will grow up alongside AI, and not know a world without it. My one-year-old son, for instance, will probably be of the generation where AI robots in the home are as normal as dogs.

(READ: Gen Alpha Will Be the AI Generation)

AI will likely become such a normalized part of the human experience that the most likely path of AI development will actually be one of merging with humans in various ways. As I wrote in the past, technopathy is a technology of tomorrow that’s already being put into practice today. Elon Musk’s “Neuralink,” a brain-computer interface, for instance, allows people to control devices by simply thinking about them, and there’s already been a successful test.

This technology will develop further without a doubt, and AI will ultimately be a part of that, allowing us to access the computational capabilities of AI while still maintaining our regular human functions. It will expand our memory, allow us to think faster, and even allow us to further equip ourselves with wearable machines that boost various physical capabilities. I also imagine it will help counter diseases like Alzheimer’s.

If we’re to become a space-faring species capable of colonizing planets, moons, and even massive space stations, this technology will be somewhat necessary. I expect three or four generations from now, we’ll be seeing this in a big way. As we develop space flight to the point where we’re comfortably going to Mars regularly, I can see this kind of tech being put on the fast track.

So, I wouldn’t look at AI as a threat to our species. It will present problems here and there as humanity adjusts to living alongside it, but these are problems we can solve without shooting at it.